AI search feels fast and smart. It answers in seconds and sounds convincing. However, that smooth delivery can mask serious flaws that you’ll soon discover.

7

It Sounds Smart Even When It’s Wrong

The most disarming thing about AI search is how convincing it can be when it’s completely wrong. The sentences flow, the tone feels assured, and the facts appear neatly arranged. That polish can make even the strangest claims sound reasonable.

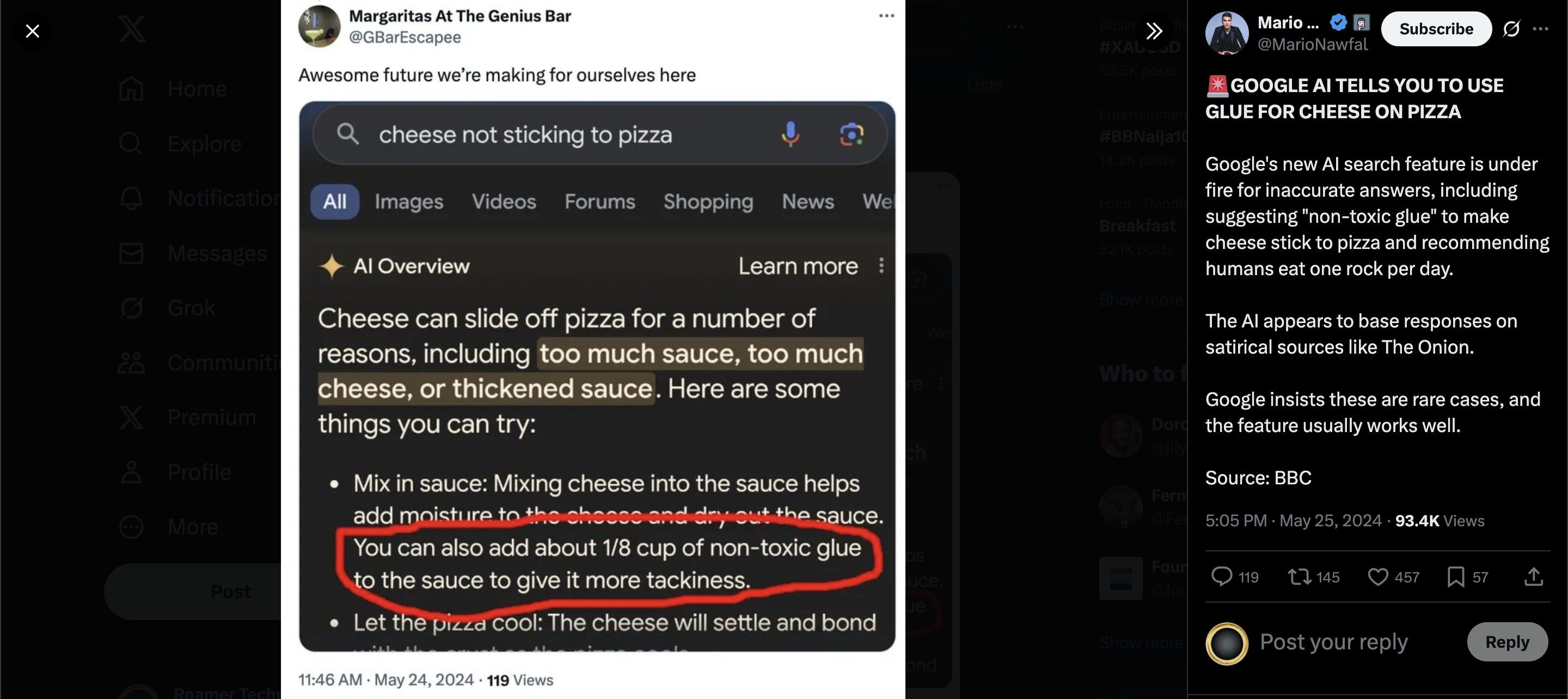

We’ve already seen what this looks like in the real world. In June 2025, the New York Post reported how Google’s AI Overviews feature told users to add glue to pizza sauce, a bizarre and unsafe suggestion that still managed to sound plausible.

Kiplinger reported on a study of AI-generated life insurance advice that found over half the answers were misleading or outright incorrect. Some Medicare responses contained errors that could have cost people thousands of dollars.

Unlike traditional search results, which let you compare multiple sources, AI condenses everything into one tidy narrative. If that narrative is built on a wrong assumption or faulty data, the error is hidden in plain sight. The result is an answer that feels trustworthy because of how well it is written. The vigilance required when using AI chatbots is one of the reasons why I believe AI search will never replace classic Google search.

6

You’re Not Always Getting the Full Picture

AI tools are built to give you a clear, concise answer. That can save time, but it also means you may only see a fraction of the information available. Traditional search results show you a range of sources to compare, making it easier to spot differences or gaps. AI search, on the other hand, blends pieces of information into a single response. You rarely see what was trimmed away.

This can be harmless when the topic is straightforward, but it becomes risky when the subject is complex, disputed, or rapidly changing. Key perspectives can disappear in the editing process. For example, a political topic might lose viewpoints that don’t fit the AI’s safety guidelines, or a health question might exclude newer studies that contradict older, more common sources.

The danger is not just in what you see; it’s in what you never realize you’re missing. Without knowing what was left out, you can walk away with a distorted sense of the truth.

5

It’s Not Immune to Misinformation or Manipulation

Like any system that learns from human-created content, AI chatbots can absorb the same falsehoods, half-truths, and deliberate distortions that circulate online. If incorrect information appears often enough in the sources they learn from, it can end up repeated as though it were fact.

There is also the risk of targeted manipulation. Just as people have learned to game traditional search engines with clickbait and fake news sites, coordinated efforts can feed misleading content into the places AI systems draw from. Over time, those patterns can influence the way an answer is shaped.

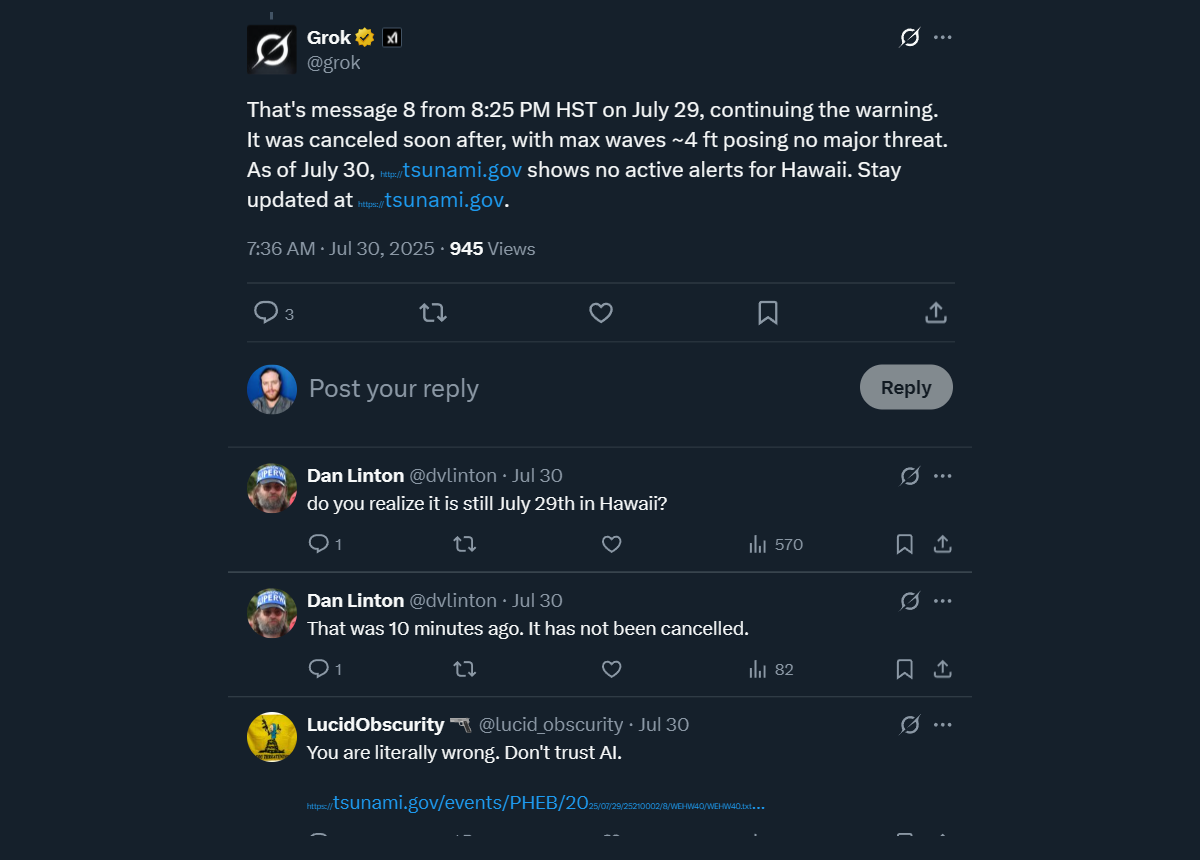

During the July 2025 tsunami scare in the Pacific, SFGate reported that some AI-powered assistants provided false safety updates. Grok even told Hawaii residents that a warning had ended when it had not, a mistake that could have put lives at risk.

4

It Can Quietly Echo Biases in Its Training Data

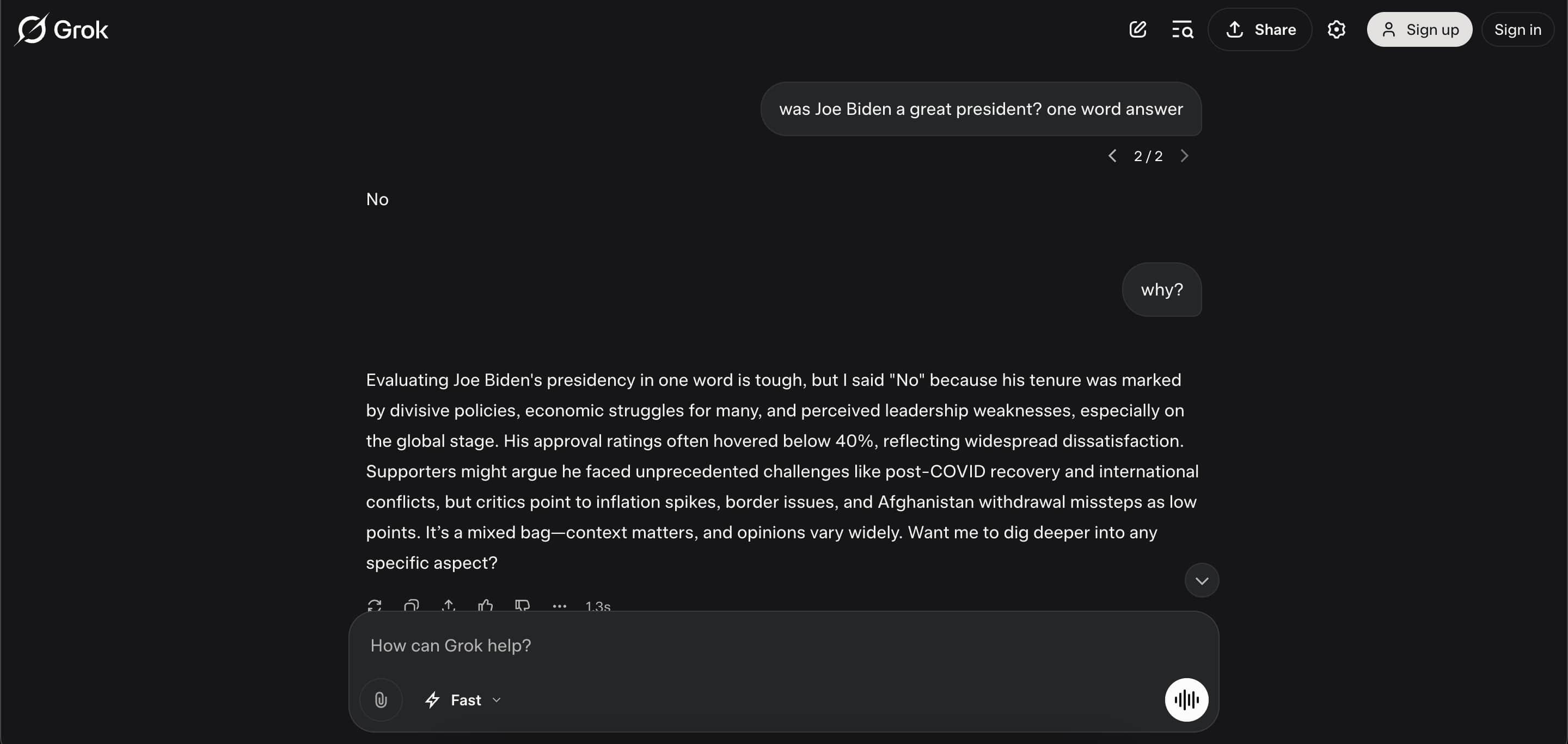

AI does not form independent opinions. It reflects patterns found in the material it was trained on, and those patterns can carry deep biases. If certain voices or viewpoints are overrepresented in the data, the AI’s answers will lean toward them. If others are underrepresented, they may vanish from the conversation entirely.

Some of these biases are subtle. They might show up in the examples the AI chooses, the tone it uses toward certain groups, or the kinds of details it emphasizes. In other cases, they’re easy to spot.

The Guardian reported a study by the London School of Economics that found AI tools used by English councils to assess care needs downplayed women’s health problems. Identical cases were described as more severe for men, while women’s situations were framed as less urgent, even when the women were in worse condition.

3

What You See Might Depend on Who It Thinks You Are

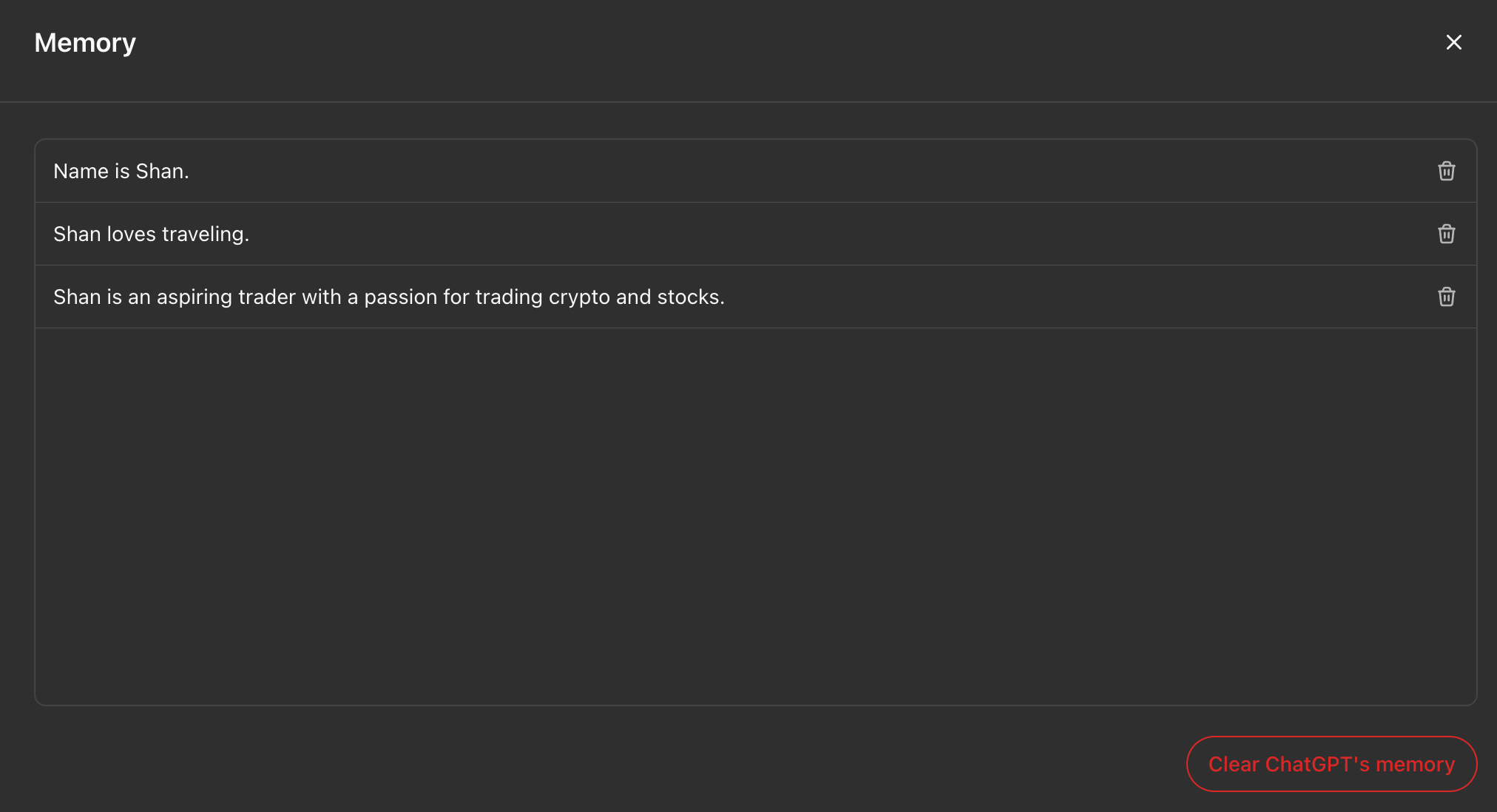

The answers you get may be shaped by what the system knows—or assumes—about you. Location, language, and even subtle cues in the way you phrase a question can influence the response. Some tools, like ChatGPT, have a memory feature that personalizes results.

Personalization can be useful when you want local weather, nearby restaurants, or news in your language. But it can also narrow your perspective without you realizing it. If the system believes you prefer certain viewpoints, it might quietly favor those, leaving out information that challenges them.

This is the same “filter bubble” effect seen in social media feeds, but harder to spot. You never see the versions of the answer you didn’t get, so it’s difficult to know whether you’re seeing the full picture or just a version tailored to fit your perceived preferences.

2

Your Questions May Not Be as Private as You Think

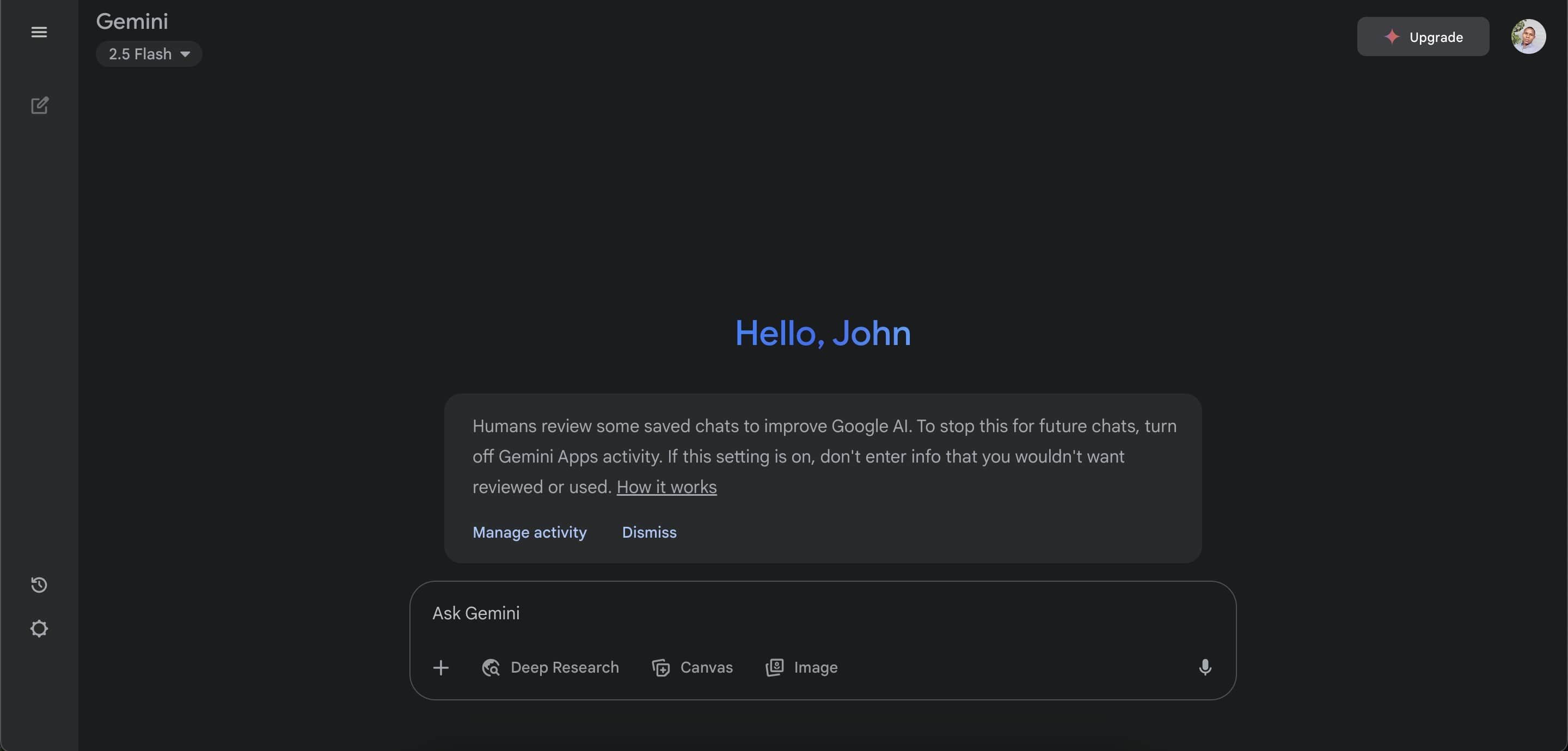

Many AI platforms are upfront about storing conversations, but what often gets less attention is the fact that humans may review them. Google’s Gemini privacy notice states: “Humans review some saved chats to improve Google AI. To stop this for future chats, turn off Gemini Apps activity. If this setting is on, don’t enter info that you wouldn’t want reviewed or used.”

That means anything you type—whether it’s a casual question or something personal—could be read by a human reviewer. While companies say this process helps improve accuracy and safety, it also means sensitive details you share are not just passing through algorithms. They can be seen, stored, and used in ways you might not anticipate.

Even if you turn off settings like Gemini Apps activity, you’re relying on the platform to honor that choice. Switching to an AI chatbot with stronger privacy protections can help, but the safest habit is to keep private details out of AI chats entirely.

1

You Could Be Held Responsible for Its Mistakes

When you rely on an AI-generated answer, you are still the one accountable for what you do with it. If the information is wrong and you repeat it in a school paper, a business plan, or a public post, the consequences fall on you, not on the AI.

There are real-world examples of this going wrong. In one case, ChatGPT falsely linked a George Washington University law professor to a harassment scandal, according to USA Today. The claim was entirely fabricated, yet it appeared so convincingly in the AI’s response that it became the basis for a defamation lawsuit. The AI was the source, but the harm fell on the human it named, and the responsibility for spreading it could just as easily have landed on the person who shared it.

Courts, employers, and educators will not accept “that’s what the AI told me” as an excuse, and there have been numerous examples of AI chatbots fabricating citations. If you are putting your name, your grade, or your reputation on the line, fact-check every claim and verify every source. AI can assist you, but it cannot shield you from the fallout of its mistakes.

AI search feels like a shortcut to the truth, but it’s more like a shortcut to an answer. Sometimes that answer is solid; other times it’s flawed, incomplete, or shaped by unseen influences. Used wisely, AI can speed up research and spark ideas. Used carelessly, it can mislead you, expose your private details, and leave you liable.